Matey Video Demo - DUI Case with Voiceover

This video is a quick demonstration of Matey, showing how attorneys can review evidence in minutes, using a DUI case as the example.


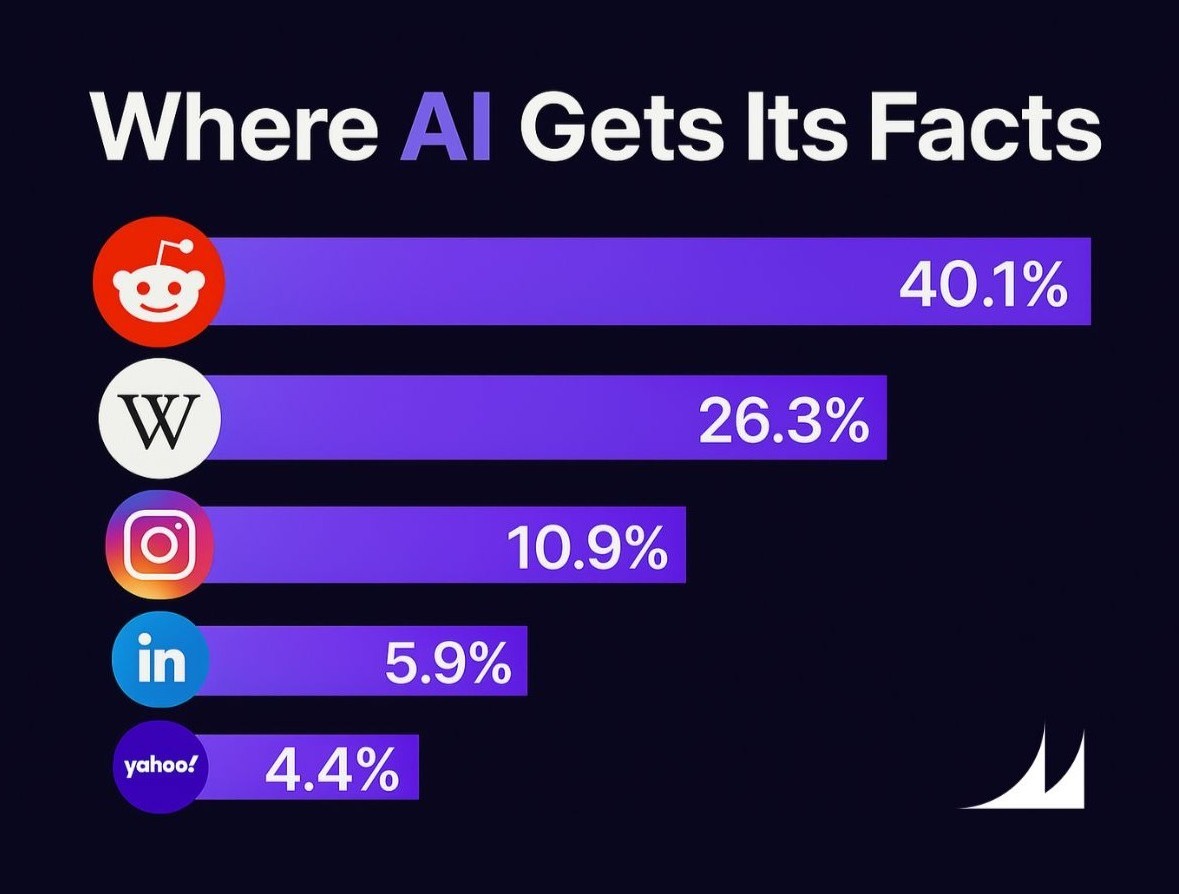

If you’ve ever wondered where AI gets its information, you’re not alone. A recent breakdown shows that most AI tools pull heavily from public online sources. Here are a few of them:

That’s right - much of what fuels the world’s most popular AI models comes from social posts, public forums, and user-edited sites. While this may be fine for brainstorming or casual questions, in the legal world, it’s a red flag.

When AI draws from unverified sources, it can confidently produce information that’s simply wrong. In legal contexts, this isn’t just inconvenient - it’s dangerous. Whether you are preparing discovery summaries, drafting motions, or analyzing transcripts, a hallucinated “fact” can waste hours, mislead a client, or, worse, erode your credibility in court.

Lawyers and paralegals don’t just need answers - they need evidence-backed, reviewable answers. That’s why safe AI must be built differently:

You can’t rely on Reddit to prepare a case brief or verify a witness statement. You need an AI that understands legal context, pulls from trusted records, and keeps you in control of every output.

Matey was designed with court confidence in mind. Instead of scraping public web content, Matey connects directly to your trusted documents and transcripts, identifies what matters, and keeps a full audit trail of how it got there. You always know:

Because in legal work, speed matters - but trust matters more.

The internet can make AI sound smart. But in the courtroom, only transparent, verifiable AI keeps you credible. So before you ask an AI for answers, ask yourself: Where does it get its facts?